Improvement Comparison

Not many companies publish actual data on how well their courses work, but some do, and it's definitely worth a look if you're searching for an LSAT course.

One issue, though, is that different companies calculate their students' score improvements differently, so we're here to clear up any confusion you might run into in that regard.

And to show off. Mostly to show off.

The Princeton Review

http://www.princetonreview.com

The Princeton Review advertises a 12-point average score improvement for its students. In the fine print, they let you know that this is based only on students who took all six diagnostic tests across the course, a group that made up 24% of their students. They also let you know that they use a "first-to-best" approach, whereby score improvements are calculated from each student's first test to their "best" test (this is our approach as well).

Our approach differs in that our data is based on students who have taken at least four of the tests scheduled throughout the course. We believe that including only students who have taken every single scheduled diagnostic is too restrictive, since missing the occasional diagnostic shouldn't be grounds for throwing out a student's data entirely. As the Princeton Review notes, only 24% of their students showed up to all six tests, which means that their data excludes 76% of their students from the outset. Granted, setting the limit at "four" diagnostics might be somewhat arbitrary, but we believe it to be a reasonable cutoff for effort, and it lets us provide a more representative statistic: a cutoff of four tests allowed us to capture 65% of the students who took the course, as opposed to only 24%.
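If you'd like to see how a "first-to-best" average with a minimum-test cutoff works in practice, here is a minimal sketch. The function names and the sample scores are made up for illustration (they are not real student data), and it assumes a student's "best" test can be the first one, in which case the improvement counts as zero.

```python
def first_to_best(scores):
    """Improvement from a student's first diagnostic to their best one.

    Assumption: the "best" test may be the first test, giving a gain of 0.
    """
    return max(scores) - scores[0]


def average_improvement(students, min_tests=4):
    """Return (average gain, share of students included).

    `students` is a list of score lists, one list per student, in the
    order the diagnostics were taken. Students with fewer than
    `min_tests` diagnostics are left out of the average.
    """
    eligible = [s for s in students if len(s) >= min_tests]
    if not eligible:
        return None, 0.0
    average = sum(first_to_best(s) for s in eligible) / len(eligible)
    coverage = len(eligible) / len(students)
    return average, coverage


# Hypothetical scores, for illustration only.
sample = [
    [150, 155, 158, 162],            # four tests
    [148, 152],                      # two tests
    [155, 154, 160, 159, 163, 161],  # six tests
]

at_least_four = average_improvement(sample, min_tests=4)
all_six = average_improvement(sample, min_tests=6)
```

Raising `min_tests` from four to six shrinks the pool of students the average is based on, which is exactly the trade-off described above.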

Our approach also differs in that we generally provide our students with an extra (seventh) diagnostic based on the most recently released LSAT, which somewhat complicates things for a direct comparison.

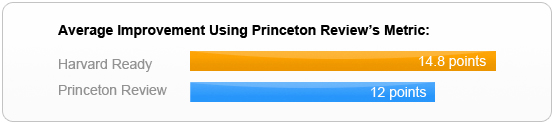

Regardless, you're probably looking for an apples-to-apples comparison, and we have that for you. To do this, we completely excluded the extra seventh diagnostic that we provided our students, and used only the data from students who took every single one of the other scheduled tests. Eerily enough, the proportion of students who fit these criteria was almost exactly the same as Princeton Review's: ~23.5%. The score improvements, however, were not: using Princeton Review's parameters, our students saw an average increase of 14.8 points.
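For the curious, the apples-to-apples filter can be sketched roughly like this. It is an illustration under stated assumptions, not our actual reporting code: the record layout (a dict mapping diagnostic number to score), the function name, and the sample scores are all hypothetical.

```python
# Diagnostics 1 through 6 are the scheduled tests; the extra seventh is ignored.
SCHEDULED = range(1, 7)


def comparable_improvement(records):
    """Return (average first-to-best gain, share of students included).

    Each record maps a diagnostic number to the score on that test, with
    missed diagnostics simply absent. Only students who took all six
    scheduled diagnostics are counted, and any seventh score is dropped.
    """
    gains = []
    for taken in records:
        if all(n in taken for n in SCHEDULED):
            scores = [taken[n] for n in SCHEDULED]
            gains.append(max(scores) - scores[0])
    if not gains:
        return None, 0.0
    return sum(gains) / len(gains), len(gains) / len(records)


# Hypothetical records, for illustration only.
sample_records = [
    {1: 150, 2: 153, 3: 155, 4: 158, 5: 160, 6: 162, 7: 170},  # took everything
    {1: 148, 2: 151, 4: 155, 5: 156, 6: 157, 7: 158},          # missed test 3
]

result = comparable_improvement(sample_records)
```

Note that the first student's seventh score (170) never enters the calculation, and the second student is excluded for missing one scheduled test.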